What Robbie the Robot can teach us about AI

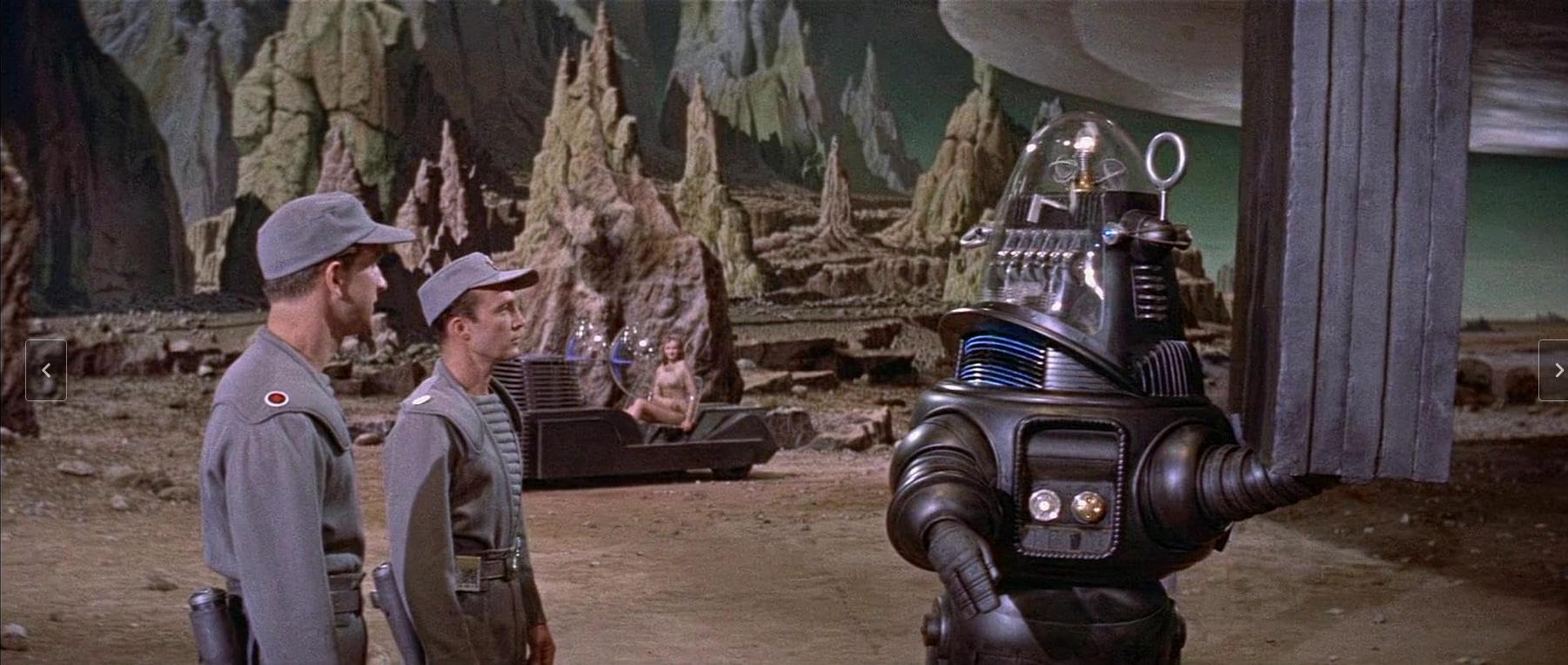

Way back in 1956, when I was ten years old, my parents took my brother and me to a drive-in movie theater to see the now-classic Forbidden Planet. I think Forbidden Planet is one of the great Sci-Fi movies of all time.

The story

The story is set on the planet Altair IV, where the United Planets starship C-57D has been sent to determine the fate of a previous expedition and return any survivors to Earth.

In addition to Anne Francis as Altaira, Walter Pidgeon as Dr. Morbius, and Leslie Nielsen as Commander John J. Adams, the film features Robbie the Robot. Robbie is not your average robot, and certainly not for the average science fiction thriller of the 1950s. Robbie can speak hundreds of Earth languages, synthesize and transport 10 tons of “isotope 217” to the starship’s landing site, design and fabricate garments for Altaira, and cook gourmet meals.

Robbie even has built-in restrictions against harming humans similar to Isaac Asimov’s Three Laws of Robotics. This results in an ethical conundrum for the robot as the film reaches its conclusion.

Robbie is an artificial intelligence with both a personality and a sense of humor. Robbie is my touchstone for determining the quality of today’s so-called AI. If your code doesn’t reach the high bar set by Robbie, it’s still just clever code and not true AI.

The junk we currently call AI

Most of us have Siri, Alexa, or some other tool that can assist us in certain tasks. Have you ever asked Alexa, “Alexa, what are you?” I have. She claims to be an AI.

We have an Amazon Fire TV Cube connected to the receiver that serves as the hub of our home theater system. I can use voice commands to turn on the devices in the system, locate and play programs we want to watch, get the weather report, and shut everything off. However, when I try to give it a sequence of multiple tasks in a single command, it always fails. We hear things like, “I don’t know how to do that,” reminiscent of HAL in “2001: A Space Odyssey,” or, “I don’t understand that.” Other failures include taking us to a page where we need to type in a search string, or to a list of TV shows, movies, books, and other items that bear no resemblance whatsoever to the thing we were trying to get it to play.

Using AI to generate images or documents seems to be a big thing, but it is not without its pitfalls. I’ve seen many images and documents that are clearly AI-generated. While the images are interesting and can be beautiful, they are, at best, no better than cutesy, third-rate, assembly-line, starving-artist pictures. They are not art in any sense of the word. And even when the generated documents are readable, none of them contain anything interesting; they read like a sixth-grade student’s essay, with enough words jammed together to meet the word count set for the exercise. At best, these documents are just marginally readable streams of words stolen from the real authors on whose works the models were trained.

Jim Hall, one of our editors and a frequent contributor, has a cautionary article about things to be wary of when using AI for coding. However, the next article we ran was also his: Using AI to translate code, in which he shows how good AI can be at that task. Yet, even so, Hall remains cautious.

The damage

I’ve read a number of articles about using AI in classrooms and the many problems that poses, not the least of which is plagiarism. The most disturbing article I’ve so far encountered is by Liz Rose Shulman, a high school teacher who gave her students an impromptu, in-class writing assignment. The results were shocking. One student panicked because she couldn’t use AI in class. Another simply refused to do a different assignment. When one student received a poor grade for a homework assignment that was clearly written by AI, he said that it was unfair because he had done “something.” His idea of “something” was giving instructions to the AI.

It seems AI is producing a generation of students who have no idea how to write or to be creative.

What real AI should look like

True AI should be able to do many things that what we have now cannot. It should be able to do them well and seamlessly, and it should be creative both in its methods and in how it prioritizes and manages its tasks. It should work a lot like Robbie the Robot.

I’ve created a minimal list of requirements that any entity must meet in order to be considered a true artificial intelligence.

- Converse easily with humans in any language using any input/output device including speech.

- The human should be unable to tell whether the entity they’re talking to is human or AI, a form of the Turing test.

- Understand that it is an artificial intelligence, not a human.

- Have and adhere to a set of AI rules that govern its behavior.

- Never perform any task that will result in harm to a human.

- Never harm a human through omission of a task whether explicit or implicit.

- Never allow any human to harm another.

- Make ethical decisions and describe the supporting reasoning.

- Easily accept a list of multiple tasks in a single command or in a string of separate commands.

- Perform each task perfectly.

- Determine and use the most efficient sequence to complete its list of tasks.

- Effectively deal with interruptions requiring it to perform other tasks.

- Generate additional tasks required by the ones it was given when those weren’t explicit enough.

- Generate documents that adhere to scientific rigor, with footnotes citing rigorously produced supporting documents.

- Watermark all documents, images and other works with a statement that they were generated by AI.

This list contains a number of items that can be debated forever. The most obvious is the definition of “ethical.” “Whose ethics?” is only the first question that springs to my mind.

And what might happen when the AI is asked to determine which of several humans should be given the only available heart for transplant? What should happen in this event? By whose ethics and standards?

One final question: whose list of attributes for AI should be the “correct” list?

I really haven’t seen any discussion about any of these factors. No one seems to be seriously considering any of these questions — at least not in any depth.

Conclusion

In the movie, Dr. Morbius appoints himself arbiter of which Krell technology should be released to Earth, and when. He does this sure in the knowledge that the Krell “brain boost” technology he used on himself is justification enough. Was it? Watch the movie to find out. Then answer the question of whose ethics were “better”: Dr. Morbius’s or Robbie’s?

Until AI can match Robbie, it’s not really AI; it’s just clever (or, in many cases, not so clever) programming.

Besides, there’s no such thing as AI. It’s just all marketing BS.

What do you think? Let us know in the comments.