Exploring Ollama and Llama 3.1 for Advanced Language Modeling

Everywhere you look, someone is talking or writing about artificial intelligence. I have been keenly interested in the topic since my graduate school days in the 1990s. I have used ChatGPT, Microsoft Copilot, Claude, Stable Diffusion, and other AI software to experiment with how this technology works and satisfy my innate curiosity. Recently, I discovered Ollama, an open-source tool for running large language models (LLMs) locally on Linux, macOS, and Microsoft Windows. Ollama is not a Meta product; the Llama models it can run, such as Llama 3.1, are developed by Meta. There is a great deal of concern that when you use LLMs in the cloud, your data is being scraped and reused by one of the major technology companies. Ollama is open-source under the MIT license, and because it runs locally, there is no danger that your work will end up in someone else’s LLM.

The Ollama website proclaims, “Get up and running with Large Language Models.” That invitation was all I needed to get started. Open a terminal on Linux and enter the following to install Ollama:

```
curl -fsSL https://ollama.com/install.sh | sh
```

The project lists all the models that you can use, and I chose the first one in the list, Llama 3.1. Installation is easy: I followed the instructions and entered the following command in the terminal:

```
$ ollama run llama3.1
```

The model began to download, which took a couple of minutes; the time will vary with your CPU and internet connection. I have an Intel i7 with 64 GB of RAM and a robust internet connection. Once the model was downloaded, I was prompted to ‘talk’ with the LLM. I decided to ask a question about the history of my alma mater, St. Bonaventure University, and entered the following:

```
$ ollama run llama3.1
>>> What is the history of St. Bonaventure University?
```

The results were good but somewhat inaccurate: “St. Bonaventure University is a private Franciscan university located in Olean, New York. The institution was founded by the Diocese of Buffalo and has a rich history dating back to 1856.” St. Bonaventure is located near Olean, New York, and it is in the Diocese of Buffalo, but it was founded in 1858. When I asked the model to name some famous St. Bonaventure alumni, the inaccuracies were comical: Bob Lanier was a famous alumnus, but Danny Ainge was not.
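Besides the interactive `>>>` prompt, Ollama serves a local REST API, by default at `http://localhost:11434`. The sketch below sends a question through the `/api/generate` endpoint using only Python’s standard library. It assumes the Ollama server is running on its default port and that `llama3.1` has already been pulled; the helper names `build_request`, `parse_reply`, and `ask` are my own hypothetical ones, not part of Ollama.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address and port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "llama3.1") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint.

    "stream": False asks the server for a single JSON object
    instead of a stream of partial responses.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_reply(body: bytes) -> str:
    """Return the generated text from a non-streaming /api/generate reply."""
    return json.loads(body)["response"]


def ask(prompt: str, model: str = "llama3.1", timeout: float = 300.0) -> str:
    """Send a prompt to the locally running model and return its answer."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return parse_reply(resp.read())
```

With the server running, `print(ask("What is the history of St. Bonaventure University?"))` returns the same kind of answer as the interactive session, and the request never leaves your machine.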

The results are rendered in Markdown, which is a real plus. I also knew that a GPU would produce results much more quickly, so I installed Ollama on my M2 MacBook Air as well. The macOS directions are even easier: download Ollama-darwin.zip, unzip the archive, and double-click the Ollama icon. The program is installed in the MacBook’s Applications folder. When I launched the program, it directed me to the Mac Terminal app, where I could enter the same commands I had entered on my Linux computer.

It is not surprising that Ollama uses a great deal of processing power, and it runs much faster on a computer with a GPU it can use. My Intel NUC 11 is a very powerful desktop computer with a quad-core 11th Gen Intel Core i7-1165G7, 64 gigabytes of RAM, and a robust internet connection for downloading additional models. I posed similar questions to the Llama 3.1 model, first on the Intel NUC running Linux and then on the M2 MacBook Air running macOS. You can see the CPU utilization on my Linux desktop below. It’s pegged, and the model’s output is slow, at approximately 50 words per minute. Contrast that with the M2 MacBook, where CPU utilization was approximately 6.9% and the model produced words faster than I could read them, because Ollama runs the model on the M2’s integrated GPU.

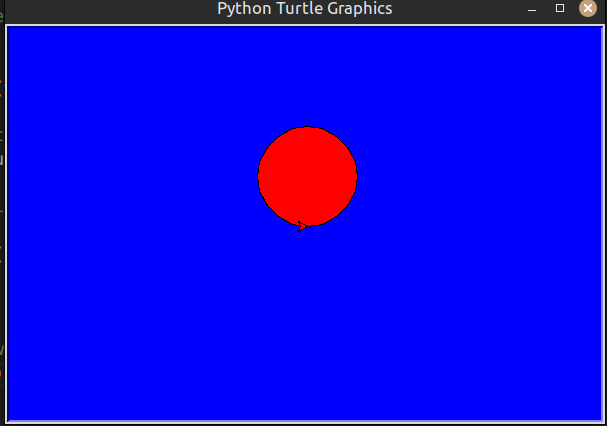
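To put numbers on a comparison like this one, you can use the timing statistics Ollama reports for a finished generation (the final `/api/generate` response, or the summary printed by `ollama run llama3.1 --verbose`). The final response includes `eval_count`, the number of tokens generated, and `eval_duration`, the time spent generating them in nanoseconds. The sketch below turns those into tokens per second and a rough words-per-minute figure. The 0.75 words-per-token ratio is a rule-of-thumb assumption for English text, not something Ollama reports, and the example numbers are hypothetical, not measurements from my machines.

```python
# Rule-of-thumb assumption: English text averages roughly 0.75 words per token.
WORDS_PER_TOKEN = 0.75

NANOSECONDS_PER_SECOND = 1_000_000_000


def tokens_per_second(stats: dict) -> float:
    """Generation speed from the eval_count and eval_duration fields of a
    final /api/generate response (eval_duration is in nanoseconds)."""
    seconds = stats["eval_duration"] / NANOSECONDS_PER_SECOND
    if seconds <= 0:
        raise ValueError("eval_duration must be positive")
    return stats["eval_count"] / seconds


def approx_words_per_minute(stats: dict, words_per_token: float = WORDS_PER_TOKEN) -> float:
    """Rough reading-speed estimate; only as good as the assumed words-per-token ratio."""
    return tokens_per_second(stats) * 60 * words_per_token


# Hypothetical numbers for illustration only: 120 tokens generated in 6 seconds.
example = {"eval_count": 120, "eval_duration": 6_000_000_000}
print(f"{tokens_per_second(example):.1f} tokens/s")        # 20.0 tokens/s
print(f"{approx_words_per_minute(example):.0f} words/min")  # 900 words/min
```

Feeding in the parsed final JSON object of a non-streaming `/api/generate` call gives real figures for your own hardware.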

While Llama 3.1 might not excel at recalling history, it does very well when asked to write Python code. I entered a prompt asking for Python code that draws a circle with the ‘turtle’ module:

```
>>> write a Python program to draw a red circle on a blue background with the turtle module.
```

```python
# Import the turtle module
import turtle

# Set up screen with blue background and no event handling
win = turtle.Screen()
win.bgcolor('blue')
turtle.setup(width=600, height=400)

# Create turtle object
t = turtle.Turtle()

# Draw red circle
t.fillcolor('red')  # set fill color to red
t.begin_fill()      # start filling the shape
t.circle(50)        # draw a circle with radius of 50 units
t.end_fill()        # stop filling the shape

# Keep window open until closed by user
turtle.done()
```
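Because Llama 3.1 formats its replies in Markdown, code like the program above normally arrives inside a fenced code block, so it can also be pulled out with a short script instead of copied by hand. This is a minimal sketch: the function names are hypothetical, and it assumes the model wraps its code in triple-backtick fences tagged `python` or `py` (or left untagged), which is common but not guaranteed. The fence string is built from single characters only so that the script can itself sit inside a Markdown code block.

```python
from __future__ import annotations

from pathlib import Path

# Built from single characters so this script can itself sit inside a Markdown fence.
FENCE = "`" * 3
PYTHON_TAGS = {"python", "py", ""}  # assumption: untagged blocks are Python


def extract_python_blocks(markdown: str) -> list[str]:
    """Return the bodies of fenced Python (or untagged) code blocks in a Markdown reply."""
    blocks: list[str] = []
    current: list[str] | None = None  # lines of the block being read, or None outside a block
    language = ""
    for line in markdown.splitlines():
        stripped = line.strip()
        if current is None:
            if stripped.startswith(FENCE):
                # Opening fence: remember the language tag, e.g. "python".
                language = stripped[len(FENCE):].strip().lower()
                current = []
        elif stripped.startswith(FENCE):
            # Closing fence: keep the block only if it is tagged as Python.
            if language in PYTHON_TAGS:
                blocks.append("\n".join(current) + "\n")
            current = None
        else:
            current.append(line)
    return blocks


def save_first_block(markdown: str, path: str) -> Path:
    """Write the first Python code block found in *markdown* to *path*."""
    blocks = extract_python_blocks(markdown)
    if not blocks:
        raise ValueError("no fenced Python code block found")
    target = Path(path)
    target.write_text(blocks[0], encoding="utf-8")
    return target
```

For example, if `reply` holds the model’s Markdown answer, `save_first_block(reply, "circle.py")` writes the turtle program to `circle.py`, ready to open in an editor or run with `python3 circle.py`. Tracking open and close fences line by line, rather than with a single regular expression, keeps a non-Python block (such as a shell snippet) from being mistaken for code.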

I copied the code produced by Llama 3.1 into a file in VSCodium, saved it, and ran it. The results are shown below.

Be sure to check out Ollama’s code repository on GitHub. Ollama is a game-changer in AI, offering a versatile and accessible platform for running large language models such as Meta’s Llama 3.1. With its extensive library of models and user-friendly framework, Ollama empowers researchers, developers, and educators to push the boundaries of what is possible with AI.