Linux hardware: The history of memory and storage devices

Buckle in for a trip back in time to the early days of memory and storage, and a look at how we got to where we are today.

In the first two articles in this series, "Computer history and modern computers for sysadmins" and "The central processing unit (CPU): Its components and functionality," I discussed memory and storage, but merely as an adjunct to understanding how the CPU works. In this article, I delve more deeply into various types of memory and storage. I also examine why we have different types of memory and storage, such as RAM, cache, hard drives, and solid-state drives (SSDs).

The terms memory and storage tend to be used interchangeably in the technology industry, and the definitions of both in the Free On-line Dictionary of Computing (FOLDOC) support this. Both can apply to any type of device in which data can be stored and later retrieved.

The ideal computer would have only one type of memory. It would be both fast and inexpensive, and it would provide random access for reading and writing any location. Random access is much more flexible than serial access because it provides a direct path to any specific location in memory: every location can be accessed as quickly as any other.
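The difference between serial and random access can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not a description of any real device: the hypothetical `SerialTape` class charges one time unit for every position the read head passes plus one for the read itself, while the hypothetical `RandomAccessMemory` class charges a single unit for any address.

```python
class SerialTape:
    """Hypothetical serial-access device: the head moves one position at a time."""

    def __init__(self, data):
        self.data = list(data)
        self.head = 0  # current position of the read head

    def read(self, address):
        # Cost = one unit per position passed (in either direction) + one unit to read.
        cost = abs(address - self.head) + 1
        self.head = address
        return self.data[address], cost


class RandomAccessMemory:
    """Hypothetical random-access device: every address costs the same."""

    def __init__(self, data):
        self.data = list(data)

    def read(self, address):
        return self.data[address], 1  # one unit, regardless of the address


tape = SerialTape(range(1000))
ram = RandomAccessMemory(range(1000))
for addr in (10, 990, 5):
    print(addr, "tape:", tape.read(addr), "ram:", ram.read(addr))
```

Reading address 990 and then address 5 from the tape costs hundreds of units each time, while the random-access model pays the same single unit for every read.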

Volatility vs. cost and speed

Storage devices have well-known attributes, such as capacity, that affect how a particular component is used in a computer, but the most important distinguishing characteristics are speed and volatility.

Volatile memory retains the data stored in it only as long as power is applied. Random-access memory (RAM) comes in various speeds and sizes. Modern RAM is made up of transistorized memory cells and is volatile, but volatile memory can be quite fast.

Non-volatile memory retains the stored data even when power is removed. Disk drives, for example, use disks coated with magnetic material. Data is written using magnetic fields and is retained as microscopic magnetic bits in concentric circular tracks on the disk. Disks and other magnetic media keep this data when power is removed, but non-volatile memory is significantly slower than volatile memory.

As we saw in the previous articles in this series, the CPU performs its tasks faster than most memory can keep up with. To compensate, the CPU uses predictive techniques to move data through multiple layers of ever-faster cache memory so that the needed data is available to the CPU when it is required.
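One of the simplest forms of prediction is sequential (next-block) prefetching: whenever a block of memory is used, fetch the following block as well, on the bet that it will be needed next. The minimal sketch below uses a hypothetical `count_misses` helper and assumes a cache of unlimited size; it counts how often the data is not already in the cache when requested. Real CPU prefetchers are implemented in hardware and use more sophisticated prediction strategies.

```python
def count_misses(accesses, prefetch):
    """Count cache misses for a sequence of block numbers.

    Simplification: the cache is an unbounded set, so nothing is ever
    evicted. Real caches are small and must discard old data.
    """
    cache = set()
    misses = 0
    for block in accesses:
        if block not in cache:
            misses += 1          # data must come from slower memory
            cache.add(block)
        if prefetch:
            cache.add(block + 1)  # predict that the next block is needed soon
    return misses


sequential = list(range(100))
print("without prefetch:", count_misses(sequential, prefetch=False))
print("with prefetch:   ", count_misses(sequential, prefetch=True))
```

For a sequential scan of 100 blocks, the prediction turns 100 misses into just 1; for an unpredictable access pattern, it helps far less.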

This is all well and good, but faster memory has always been more expensive than slower memory. Volatile memory is also more costly than non-volatile. So far.

Early storage

One early non-volatile storage device was paper tape with holes punched in it, read by a teletype machine equipped with a paper tape reader. This method had the advantage of being relatively inexpensive, but it was slow and fragile. Because paper tapes could be damaged, there were programs to duplicate them and kits to patch them. Typically, the program was stored on tape, and the data was entered from the console or from another tape. Output could be printed to the console or punched into another tape.

Punched cards were another early storage device. Because each card represented a record, much of the processing, such as extracting the desired data from a larger set, could be performed off-line on devices like sorters and collators. Punched cards were a more versatile extension of paper tape.

Early volatile storage was crude, made up of banks of electromechanical relays, and it was very slow and expensive. Another early volatile storage medium, better suited to main storage, was the cathode ray tube (CRT). Each CRT could store 256 bits of data at a cost of about US$1.00 per bit. Yes – per bit.

Sonic delay line memory was used for a while. One of the keypunch machine types I maintained while I worked for IBM used this type of memory. It was very limited in capacity and speed, and provided only sequential access rather than random. However, it worked well for its intended use.

Magnetic disk and tape drive storage developed rapidly in the 1950s, and in 1956 IBM introduced RAMAC, the first commercial hard disk. This non-volatile storage unit used 50 magnetic disks on a single rotating spindle to store about 3.75MB – yes, megabytes – of data. Renting at $750/month, this storage cost about $0.0002 per byte per month, or roughly $200 per megabyte per month.

Magnetic disks are what I like to call semi-random access. Data is written in thousands of concentric circular tracks on the disk, and each track contains many sectors of data. To access the data in a specific sector and track, the read/write heads must first seek to the correct track and then wait until the disk rotates the desired sector under the head. As fast as today’s hard disk drives may seem, they are still quite slow compared to solid-state RAM.
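The cost of this semi-random access can be estimated with simple arithmetic: average access time is roughly the average seek time plus the average rotational latency, which is the time for half a revolution. The sketch below ignores data transfer and controller overhead, and the 7,200 RPM speed and 9 ms seek time are assumed example values rather than figures for any particular drive.

```python
def average_access_time_ms(avg_seek_ms, rpm):
    """Estimate the average time to reach a random sector, in milliseconds.

    Once the head is on the right track, the desired sector is on average
    half a revolution away (the average rotational latency).
    """
    ms_per_revolution = 60_000 / rpm
    avg_rotational_latency_ms = ms_per_revolution / 2
    return avg_seek_ms + avg_rotational_latency_ms


# Assumed example values: a 7,200 RPM drive with a 9 ms average seek time.
print(f"{average_access_time_ms(9.0, 7200):.2f} ms")
```

With these assumptions, a random read waits about 13 ms before any data is transferred, while RAM access times are measured in nanoseconds.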

Magnetic core memory was widely used in the 1950s and ’60s and offered random access. Its drawbacks were that it was expensive and slow. The cost of magnetic core memory started at $1.00 per bit but fell to about $0.01 per bit when the manufacturing of the core planes was moved to Asia.

After transistors came into use in the 1950s, computer memory and other components moved to new semiconductor devices, also called solid-state devices. This type of memory is volatile, and its data is lost when power is removed.

By 1970, RAM had been placed onto integrated circuit (IC) chips. As ICs packed more memory into smaller spaces, memory also became faster. The initial cost of IC memory was around $0.01 per bit, or eight cents per byte.

The first solid-state drive (SSD) was demonstrated in 1991. SSDs store data in flash memory, a non-volatile semiconductor memory technology that is also used today in SD memory cards and thumb drives. SSDs are much faster than rotating hard disk drives but still significantly slower than the RAM used for main storage.

Today

Today’s hard drives can contain several terabytes of data on a magnetic, non-volatile recording medium. A typical 4TB hard drive can be purchased for about $95, so the cost works out to about $0.000000000024 per byte. Modern RAM is available in enormous amounts, and it is very fast. RAM can cost as little as $89 for 16GB, which works out to about $0.0000000055625 per byte. At that rate, 4TB of RAM costs about $22,250, so RAM storage is still roughly 234 times more expensive than hard drive storage.
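Cost per byte is simply price divided by capacity. This small Python sketch applies that arithmetic to the prices quoted in this article, assuming decimal units (1MB = 10^6 bytes, 1TB = 10^12 bytes). The RAMAC rental figures from earlier are included for comparison; note that they are per month rather than a one-time purchase.

```python
# Decimal units, as drive and memory vendors usually quote them (an assumption here).
MB, GB, TB = 10**6, 10**9, 10**12


def cost_per_byte(price_usd, capacity_bytes):
    """Price divided by capacity, in US dollars per byte."""
    return price_usd / capacity_bytes


hdd = cost_per_byte(95, 4 * TB)          # 4TB hard drive for $95
ram = cost_per_byte(89, 16 * GB)         # 16GB of RAM for $89
ramac = cost_per_byte(750, 3.75 * MB)    # RAMAC rental: $750 per month for 3.75MB

print(f"HDD:   ${hdd:.3e} per byte")
print(f"RAM:   ${ram:.3e} per byte")
print(f"RAMAC: ${ramac:.4f} per byte per month")
print(f"4TB of RAM: ${ram * 4 * TB:,.0f}")
print(f"RAM/HDD cost ratio: {ram / hdd:.0f}x")
```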

Although not the primary subject of this article, we also have so-called off-line storage such as CD/DVD-ROM/RW devices, and removable media such as external hard drives and tape drives.

Different storage types working together

We have disk drives that store multiple terabytes of data in a non-volatile medium. They are slower than any type of semiconductor memory and do not provide true random access, so they are not suitable for use as main storage to which the CPU has direct access. We also have RAM, which is significantly more expensive than disk drive storage but is fast, and the CPU can access it directly. Thumb drives, CDs/DVDs, and other storage types hold data and programs that are needed only infrequently, or perhaps only when an application is installed.

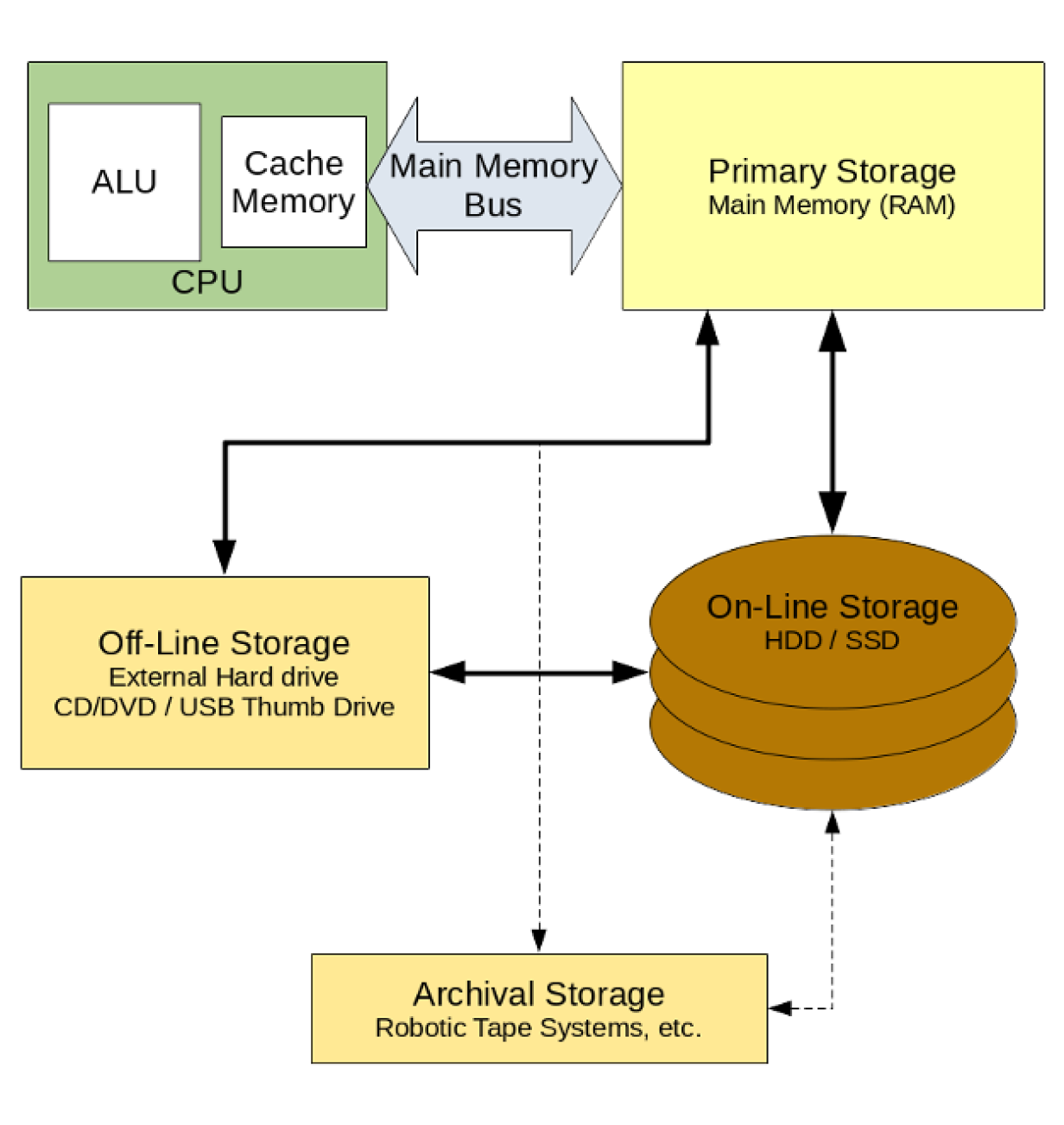

So our computers use a combination of storage types. We have primary storage with gigabytes of RAM for holding programs and data while the CPU is working on them. We have online storage, HDD or SSD devices that provide terabytes of space for the long-term storage of data that can be accessed at a moment’s notice. Figure 1 shows how this all works together.

The data closest to the CPU is stored in the cache, which is the fastest and most expensive RAM in the system. Primary storage is also RAM, but it is neither as fast nor as costly as the cache. When the CPU needs data, whether program code or the data used by the program, the cache provides it when possible. If the data is not already in the cache, the CPU's memory management hardware locates it in RAM and moves it into the cache, from which it can be fed to the CPU as instructions or data.
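The hit-or-miss logic described above can be modeled with a toy cache. This is a minimal sketch under simplifying assumptions: the hypothetical `TinyCache` class holds individual addresses rather than the fixed-size cache lines and sets that real CPU caches use, and when full it evicts the least recently used (LRU) entry, which is only one of several replacement policies hardware may implement.

```python
from collections import OrderedDict


class TinyCache:
    """Hypothetical LRU cache sitting in front of slower main memory."""

    def __init__(self, main_memory, capacity):
        self.main_memory = main_memory   # backing store: address -> value
        self.capacity = capacity         # maximum number of cached entries
        self.lines = OrderedDict()       # cached address -> value, least recent first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.lines:
            self.hits += 1
            self.lines.move_to_end(address)   # mark as most recently used
            return self.lines[address]
        self.misses += 1
        value = self.main_memory[address]     # slow path: fetch from RAM
        self.lines[address] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used entry
        return value


main_memory = {addr: addr * 2 for addr in range(16)}
cache = TinyCache(main_memory, capacity=4)
for addr in [0, 1, 2, 0, 1, 3, 4, 0, 5, 1]:
    cache.read(addr)
print("hits:", cache.hits, "misses:", cache.misses)
```

With room for only four entries, this access sequence produces 3 hits and 7 misses; each miss stands for a slower trip out to RAM.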

Program code and the data used by programs are kept in online storage, such as a hard disk drive (HDD) or solid-state drive (SSD), until needed. Then they are loaded into RAM so the CPU can access them.
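On a Linux system you can check which kind of online storage you have. The kernel exposes a `queue/rotational` file for each block device under `/sys/block`, containing `1` for rotating disks and `0` for non-rotational devices such as SSDs; `lsblk -d -o NAME,ROTA` reports the same flag. The Python sketch below, built around a hypothetical `classify_block_devices` helper, simply reads those files. Virtual devices such as loop devices may report a value that says little about the underlying hardware.

```python
from pathlib import Path


def classify_block_devices(sys_block="/sys/block"):
    """Return a dict mapping block device names to 'HDD' or 'non-rotational'.

    Reads the kernel's queue/rotational flag: 1 for spinning disks,
    0 for devices without moving parts, such as SSDs.
    """
    root = Path(sys_block)
    result = {}
    if not root.is_dir():
        return result  # not Linux, or sysfs is not mounted
    for dev in sorted(root.iterdir()):
        flag = dev / "queue" / "rotational"
        if flag.is_file():
            rotational = flag.read_text().strip() == "1"
            result[dev.name] = "HDD" if rotational else "non-rotational"
    return result


if __name__ == "__main__":
    for name, kind in classify_block_devices().items():
        print(f"{name}: {kind}")
```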

Archival storage is used primarily for long-term purposes such as backups and other data maintenance. The storage medium is typically high-capacity tape, which is relatively inexpensive but also the slowest medium for accessing stored data. However, USB thumb drives and external drives can also be used for archival storage. Backups and archival data tend to be stored off-site in secure, geographically separate facilities, which makes them time-consuming to retrieve when needed.

Data from archival and off-line storage can be copied to the online storage device or directly into main storage. One excellent example of the latter is a Fedora live USB thumb drive. The system boots directly from the thumb drive, which is mounted and used in the same way as traditional hard drive storage.

Convergence

The storage picture I have described so far has been fluid since the beginning of the computer age. New devices are being developed all the time, and many that appear in the historical timelines of the Computer History Museum have fallen by the wayside. Have you ever heard of bubble memory?

As storage devices evolve, the way storage is used also changes. We see the beginnings of a new approach to storage led by phones, watches, tablets, and single-board computers (SBCs) such as Arduino and Raspberry Pi. None of these devices uses hard drives for storage. Instead, they use at least two forms of solid-state memory: non-volatile flash memory, such as a micro-SD card, for online storage, and faster, volatile RAM for main memory.

I fully expect the cost and speed of these two types of memory to converge so that all storage is the same: fast, inexpensive, and non-volatile. Solid-state storage devices with these characteristics will ultimately replace the current implementation of separate main and secondary storage.